At the beginning of the 20th century, around 120 years ago, the scientific community was certain it had cracked the code of the universe. Newton’s laws explained how objects moved, from the proverbial apple to the heavenly bodies, and light was understood to behave like ripples on the River Cam. Physics was complete and everything made sense… except for a few puzzling experiments that stubbornly refused to fit what was expected. These few ‘glitches in the matrix’ turned out to shake the very foundations of physics, of our understanding of the universe, and of reality itself.

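These were not very complex measurements. The fact that hot objects glow seemed well understood until scientists measured the colour of the glow at very high temperatures: classical theory predicted that a hot object should emit an unbounded amount of energy at short, ultraviolet wavelengths, a failure that became known as the ‘ultraviolet catastrophe’. Expectation and observation could not have differed more. Another simple example is the photoelectric effect, where shining light on a piece of metal releases electrons. The effect was real, but it behaved very differently from what theory predicted: the energy of the released electrons depended on the colour of the light rather than on its brightness. To make matters worse, both of these measurements were in perfect agreement with a theory that assumed light was made of particles, discrete packets of energy known as quanta.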

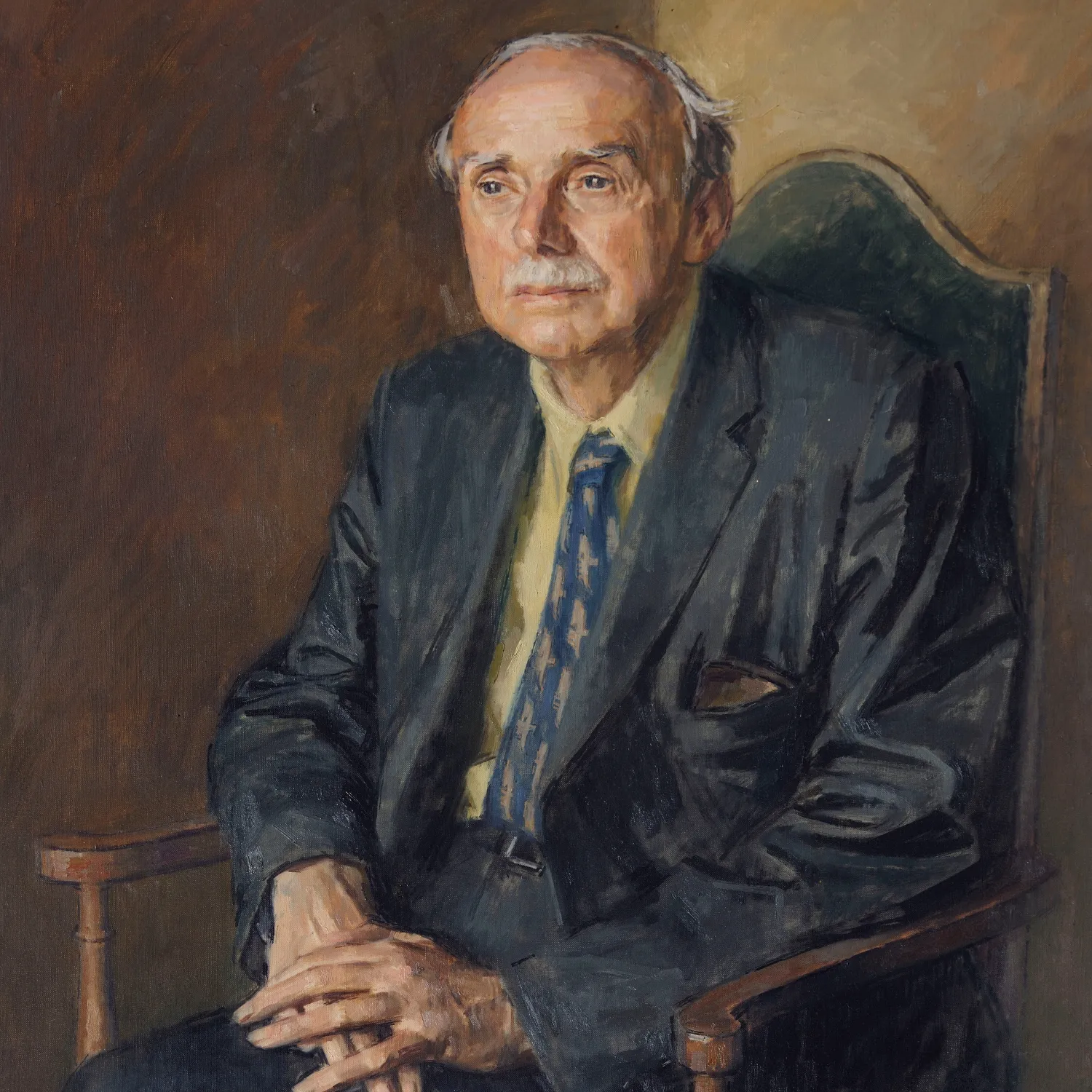

This was the seed of what would become quantum physics, developed over the decades that followed. By the 1920s we had de Broglie’s wave-particle duality for matter and light, Schrödinger’s superposition principle, and Heisenberg’s uncertainty relations, all conspiring to challenge 19th-century determinism and establishing themselves as the new reality. Our very own Paul Dirac (1928) unified special relativity and quantum physics in his famous and elegant equation, which predicted the existence of ‘antimatter’. Initially assumed to be a mere mathematical construct, antimatter was observed only four years later, in 1932, with the discovery of the positron.

What followed was a century of scientific discovery. The 1940s saw the development of quantum electrodynamics by the likes of Feynman, Tomonaga, and Schwinger, offering a strong theoretical framework to explain how light and matter interact. In the 1960s, John Bell brought the obscure notions of entanglement and nonlocality into the spotlight as experimentally observable effects. In a keynote speech in 1981, Feynman suggested that quantum physics could offer an advantage in solving computationally hard problems. This suggestion is now taken as the onset of quantum technologies: the search for applications where quantum physics may offer useful technological opportunities.

My own research focuses on developing light-matter interfaces that could help us build scalable quantum networks, as well as quantum-enhanced sensors for the nanoworld. On the one hand, using quantum electrodynamics, we control the interaction of localised single electrons and single photons, both treated as quantum bits, or qubits, to create a distributed network of quantum devices that uses nonlocal entanglement as a resource to link multiple quantum computers. On the other hand, we investigate how these spin-photon interfaces behave in complex environments such as the inside of a living cell, so that they can serve as local sensors of biological dynamics with enhanced sensitivity and resolution.

This year marks the centenary of quantum science, with many celebrations taking place worldwide. The UK is particularly happy to celebrate not only the legacy of the last 100 years, but also its vision as one of the first nations to establish a national initiative, the UK National Quantum Technologies Programme, which aims to deliver applications of quantum physics for the benefit of society. The programme has been running for 10 years and has already created an exciting ecosystem of physicists, engineers, and industrialists, as well as other stakeholders in society, pushing the frontiers of translating science into future technologies.

Given the very counter-intuitive nature of quantum physics, it is hard to predict exactly where its impact will be felt. That said, over the next two decades we are likely to see impact in a few areas. The most prominent will be a step-change in the simulation of complex systems, from designing new materials to tackle the climate crisis to more efficient design and synthesis of drugs for healthcare. Even so, the question of which problems a quantum computer can solve is a field of research in its own right, because we still don’t know all the applications for which such a computer could be used.

The total data storage capacity in the world today is estimated at around 200 zettabytes, that is, 2 × 10²³ bytes (the number 2 followed by 23 zeroes). Storing the full quantum state of an ideal 81-qubit quantum computer on a conventional computer would require around 40,000 zettabytes. So, on some problems, something that would take a current computer until the death of the universe to work out could potentially be done in under a day by a quantum computer. We crossed the 1,000-qubit threshold in 2023, albeit with machines not yet performing at the full capacity offered by quantum physics, so we may be less than a decade away from a machine with this kind of power.
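
The 40,000-zettabyte figure can be checked with a back-of-envelope calculation. The sketch below assumes (our assumption, not stated above) that each of the 2^81 complex amplitudes describing an 81-qubit state is stored as a pair of 64-bit floating-point numbers, i.e. 16 bytes; the function name `state_vector_zettabytes` is hypothetical.

```python
# Back-of-envelope memory estimate for simulating an ideal n-qubit state classically.
# Assumption: each complex amplitude is stored as two 64-bit floats (16 bytes).

ZETTABYTE = 10**21          # bytes (SI prefix zetta = 10^21)
BYTES_PER_AMPLITUDE = 16    # 8-byte real part + 8-byte imaginary part


def state_vector_zettabytes(n_qubits: int) -> float:
    """Memory needed to store the full state vector of n_qubits, in zettabytes."""
    amplitudes = 2**n_qubits  # an n-qubit state has 2**n complex amplitudes
    return amplitudes * BYTES_PER_AMPLITUDE / ZETTABYTE


world_storage_zb = 200  # global estimate quoted in the text
print(f"World storage: {world_storage_zb * ZETTABYTE:.1e} bytes")    # 2.0e+23 bytes
print(f"81-qubit state: {state_vector_zettabytes(81):,.0f} ZB")      # 38,686 ZB
```

Under these assumptions the estimate comes out at roughly 38,700 zettabytes, in line with the figure above, and every additional qubit doubles it.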

Quantum mechanics will continue to disrupt our understanding of reality, but we should see this as a positive. Understanding the rainbow doesn’t make it less beautiful; understanding sound waves doesn’t make you enjoy jazz any less. What science should continue to do, then, is share some of that joy of understanding with all members of our society. That's where science communication comes in. It is therefore an outstanding opportunity that the United Nations has declared 2025 the International Year of Quantum Science and Technology. I hope this centenary will provide a good forum for discussions about the future benefits of this science.

Heisenberg’s theory (1925) His matrix mechanics introduces a consistent theoretical formulation of quantum mechanics, redefining our understanding of observables and of the act of measurement

Schrödinger’s waves (1926) Schrödinger's wave equation set the stage for the treatment of matter as waves, revealing the probabilistic nature of the behaviour of particles and shedding light on the electronic orbitals in atoms

Schrödinger’s Cat (1935) This ‘thought experiment’ was devised to illustrate the paradoxes of quantum superposition and measurement, adding to the ongoing debate about the interpretation of quantum mechanics. Today, the operational advantages and resilience of 'cat states' have already been demonstrated in superconducting quantum computers

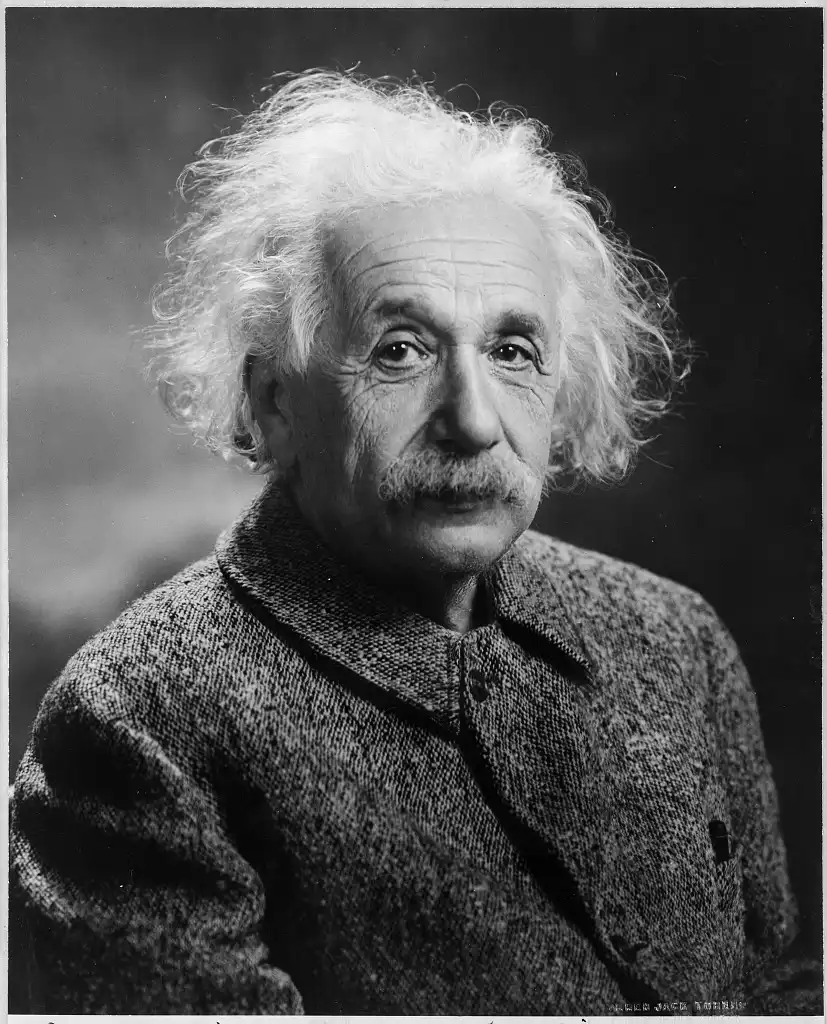

Einstein, Podolsky and Rosen – spooky action at a distance (1935) This seminal EPR paper questioned the completeness of quantum mechanics, highlighting the apparent nonlocality of entanglement. Bell’s theorem in 1964 put this challenge in experimentally measurable terms; the predictions of quantum mechanics have since been confirmed experimentally, and Bell tests are routinely used today to check that distant qubits are nonlocally entangled
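
Bell tests of this kind are usually run in the CHSH form of Bell's inequality (after Clauser, Horne, Shimony and Holt). The sketch below computes the textbook quantum prediction for two qubits in an ideal, noise-free singlet state, whose correlation between measurements at angles a and b is E(a, b) = -cos(a - b); any local hidden-variable theory must satisfy |S| ≤ 2. The function names are illustrative, not taken from any particular experiment.

```python
import math

# Idealised CHSH calculation for two qubits in the singlet state.
# Quantum prediction for the correlation at measurement angles a, b: E = -cos(a - b).
# Local hidden-variable theories are bounded by |S| <= 2.


def correlation(a: float, b: float) -> float:
    """Quantum correlation of singlet-state measurements at angles a and b (radians)."""
    return -math.cos(a - b)


def chsh(a: float, a2: float, b: float, b2: float) -> float:
    """CHSH combination S = E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return (correlation(a, b) - correlation(a, b2)
            + correlation(a2, b) + correlation(a2, b2))


# Standard angle choice that maximises the quantum violation
s = chsh(0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)
print(f"|S| = {abs(s):.3f}  (local bound: 2)")  # |S| = 2.828
```

The ideal singlet reaches |S| = 2√2 ≈ 2.83, the largest value quantum mechanics allows; an experiment on distant qubits certifies entanglement when its measured |S| sits clearly above 2 despite noise.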

Meitner and Hahn discover nuclear fission (1938) Revealing the critical role quantum mechanics plays even in nuclear processes, the discovery of fission showed the macroscopic power and reach of the new theory, which could no longer be ignored

Feynman et al., enter QED (1950) Quantum electrodynamics, which unifies quantum mechanics and special relativity to describe light-matter interactions, became a cornerstone of modern physics and provided the framework for quantum optics and the Standard Model of particle physics

Hugh Everett – many-worlds interpretation (1957) Following in the footsteps of the early developers of quantum physics and their thought experiments, Everett proposed the many-worlds interpretation, in which all possible outcomes of a quantum measurement occur, each in one of infinitely many parallel universes

Feynman strikes again, quantum simulation (1981) During a keynote speech in 1981, Feynman suggests that quantum systems can simulate nature most efficiently, as nature itself is quantum. This introduces the concept of quantum simulation and is taken as the dawn of quantum technologies

The first quantum computer arrives with two quantum bits (1998) The first two-qubit quantum computer solved Deutsch’s problem and demonstrated the physical viability of quantum processors, initiating experimental progress towards quantum information processing and computing
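
Deutsch's problem asks whether a function f taking one bit to one bit is constant (f(0) = f(1)) or balanced (f(0) ≠ f(1)). A classical computer must evaluate f twice to be sure; Deutsch's algorithm needs a single query. The following is a minimal, idealised state-vector simulation of the textbook circuit, assuming perfect, noise-free gates; it illustrates the algorithm rather than how the 1998 hardware worked, and the helper names (`hadamard`, `oracle`, `deutsch`) are our own.

```python
import math

# Idealised state-vector sketch of Deutsch's algorithm (illustrative only).
# Two qubits: x (query register) and y (answer register).
# Basis state |x y> is stored at list index 2*x + y; amplitudes stay real here.

INV_SQRT2 = 1 / math.sqrt(2)


def hadamard(state, qubit):
    """Apply a Hadamard gate to qubit 0 (x) or qubit 1 (y)."""
    shift = 1 - qubit                     # x is the high bit, y the low bit
    new = [0.0] * 4
    for idx, amp in enumerate(state):
        bit = (idx >> shift) & 1          # current value of the target qubit
        flipped = idx ^ (1 << shift)      # same basis state with target flipped
        # H|b> = (|0> + (-1)^b |1>) / sqrt(2)
        new[idx] += amp * INV_SQRT2 * (-1 if bit else 1)
        new[flipped] += amp * INV_SQRT2
    return new


def oracle(state, f):
    """U_f |x, y> = |x, y XOR f(x)>: a permutation of basis states."""
    new = [0.0] * 4
    for idx, amp in enumerate(state):
        x, y = idx >> 1, idx & 1
        new[2 * x + (y ^ f(x))] += amp
    return new


def deutsch(f):
    """Classify a one-bit function f as 'constant' or 'balanced' with one query."""
    state = [0.0, 1.0, 0.0, 0.0]          # start in |x=0, y=1>
    state = hadamard(hadamard(state, 0), 1)
    state = oracle(state, f)              # the single call to f
    state = hadamard(state, 0)
    p_x_is_1 = state[2] ** 2 + state[3] ** 2  # probability of measuring x = 1
    return "balanced" if p_x_is_1 > 0.5 else "constant"


for name, f in [("f(x) = 0", lambda x: 0), ("f(x) = 1", lambda x: 1),
                ("f(x) = x", lambda x: x), ("f(x) = NOT x", lambda x: 1 - x)]:
    print(name, "->", deutsch(f))
```

The single call to f writes the answer into a relative phase of the x register, which the final Hadamard gate turns into a definite measurement outcome: x = 0 for constant functions and x = 1 for balanced ones.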

Pan et al., Quantum encryption in space! (2016) Jian Wei Pan and colleagues, leading China’s quantum technology efforts in secure communications, demonstrate the first satellite-based quantum key distribution via the Micius satellite and two ground stations, proving the feasibility of global-scale secure quantum communication

Google gains quantum advantage with a 53-qubit quantum computer (2019) Developed by John Martinis and his team, Google’s 53-qubit superconducting quantum processor achieves quantum advantage over conventional approaches to computing on a specific task, sampling the output of random quantum circuits, marking a milestone in demonstrating that quantum machines can outperform their classical counterparts

Lukin et al. and the quantum processor with logical qubits (2023) Following the 2016 demonstration of the first proof-of-concept of an error-corrected logical qubit, scalable logical qubits were demonstrated in 2023 by Mikhail Lukin and colleagues, who developed a quantum processor with 48 fully functional logical qubits, marking the start of the era of fault-tolerant quantum computing

This article was originally published in The Eagle 2025